Hadoop + Spark Study Notes

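The following is a personal study note and is not authoritative; it records and summarizes my own learning and understanding. It may contain personal opinions and interpretations and is for reference only, so readers should think and verify independently before applying any of it. Before citing it in a formal or professional setting, consult authoritative and official sources.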

Server environment

DISTRIB_ID=Ubuntu

DISTRIB_RELEASE=24.04

DISTRIB_CODENAME=noble

DISTRIB_DESCRIPTION="Ubuntu 24.04 LTS"

Linux zj 6.8.0-35-generic #35-Ubuntu SMP PREEMPT_DYNAMIC Mon May 20 15:51:52 UTC 2024 x86_64 x86_64 x86_64 GNU/Linux

Download Hadoop 3.3.6

https://hadoop.apache.org/release/3.3.6.html

Download tar.gz

Copy hadoop-3.3.6.tar.gz to the server.

Install Hadoop

Prerequisites

Supported Java versions: Apache Hadoop 3.3 and upper supports Java 8 and Java 11 (runtime only).

Install Java, ssh, and pdsh:

$ sudo apt-get install openjdk-11-jdk

$ sudo apt-get install ssh

$ sudo apt-get install pdsh

Stop and disable the firewall:

systemctl stop ufw.service

systemctl disable ufw.service

Create the hadoop user and set its password:

useradd -r -m -s /bin/bash hadoop

passwd hadoop

Give the hadoop user sudo rights by adding the last line below through visudo:

export VISUAL=vim

sudo -E visudo

hadoop ALL=(ALL) ALL
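As a quick sanity check after the installs above, the sketch below reports which of the expected commands are still missing from PATH. The helper name `missing_tools` is made up for this note; it relies only on the shell builtin `command -v`.

```shell
# Print every command from the argument list that is not found on PATH.
missing_tools() {
  for tool in "$@"; do
    command -v "$tool" >/dev/null 2>&1 || printf '%s\n' "$tool"
  done
}

# java, ssh, and pdsh are the commands provided by the apt-get installs above.
missing="$(missing_tools java ssh pdsh)"
if [ -n "$missing" ]; then
  echo "missing prerequisites:" $missing
else
  echo "all prerequisites found"
fi
```

An empty result only shows that the binaries exist; it does not check the Java version.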

Extract

sudo tar -xzvf hadoop-3.3.6.tar.gz

sudo mv hadoop-3.3.6 /usr/local/hadoop

sudo chown -R hadoop:hadoop /usr/local/hadoop

Configure JAVA_HOME

Find the Java installation directory by following the symlinks, for example with ls -l:

root@zj:~# ls -l /usr/bin/java

lrwxrwxrwx 1 root root 22 Apr 17 14:23 /usr/bin/java -> /etc/alternatives/java

root@zj:~# ls -l /etc/alternatives/java

lrwxrwxrwx 1 root root 43 Apr 17 14:23 /etc/alternatives/java -> /usr/lib/jvm/java-11-openjdk-amd64/bin/java

Set JAVA_HOME in hadoop-env.sh:

vim /usr/local/hadoop/etc/hadoop/hadoop-env.sh

export JAVA_HOME=/usr/lib/jvm/java-11-openjdk-amd64

Run the hadoop script to confirm that it starts:

/usr/local/hadoop/bin/hadoop

Set the Hadoop environment variables:

export HADOOP_HOME=/usr/local/hadoop

export PATH=$HADOOP_HOME/bin:$PATH
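The symlink chain above can also be resolved in one step. This is a minimal sketch, assuming GNU `readlink -f` (as shipped on Ubuntu) and the JDK 11 layout seen in these notes, where the binary sits at `<JAVA_HOME>/bin/java`. The helper name `java_home_from_bin` is hypothetical.

```shell
# Strip the trailing /bin/java from a fully resolved java binary path.
java_home_from_bin() {
  printf '%s\n' "${1%/bin/java}"
}

# Only resolve when java is installed; readlink -f follows every symlink in the chain.
if command -v java >/dev/null 2>&1; then
  java_bin="$(readlink -f "$(command -v java)")"
  echo "export JAVA_HOME=$(java_home_from_bin "$java_bin")"
fi
```

On the server described here this should print the same path that was entered by hand in hadoop-env.sh.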

Configure Hadoop (pseudo-distributed)

Edit etc/hadoop/core-site.xml as follows:

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://localhost:9000</value>

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>/home/hadoop/hadoopdata</value>

</property>

<property>

<name>hadoop.http.staticuser.user</name>

<value>hadoop</value>

</property>

</configuration>

Edit etc/hadoop/hdfs-site.xml as follows:

<configuration>

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

</configuration>
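To confirm that Hadoop actually picks up the two files, the effective values can be read back with `hdfs getconf -confKey`. The sketch below compares them with the values from these notes; the helper name `expect_conf` is made up for illustration, and it assumes `hdfs` is on PATH.

```shell
# Compare one effective configuration value against the expected one.
# `hdfs getconf -confKey <key>` prints the value Hadoop resolves for that key.
expect_conf() {
  key="$1"; expected="$2"
  actual="$(hdfs getconf -confKey "$key")"
  if [ "$actual" = "$expected" ]; then
    echo "ok: $key=$actual"
  else
    echo "mismatch: $key=$actual (expected $expected)"
    return 1
  fi
}

# Run the checks only where the hdfs command is available.
if command -v hdfs >/dev/null 2>&1; then
  expect_conf fs.defaultFS hdfs://localhost:9000 || true
  expect_conf dfs.replication 1 || true
fi
```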

Set up passwordless ssh login to localhost

ssh-keygen -t rsa -P '' -f ~/.ssh/id_rsa

cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

chmod 0600 ~/.ssh/authorized_keys

Test the login:

ssh localhost
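The login test can also be run non-interactively. This is a sketch, assuming the OpenSSH client: with `BatchMode=yes`, ssh fails instead of prompting, so a zero exit status means key-based login works. It also fails if the host key for localhost has not been accepted yet, which the interactive `ssh localhost` takes care of. The function name is hypothetical.

```shell
# Return success only if ssh can log in to the host without any prompt.
# BatchMode=yes makes ssh fail instead of asking for a password.
can_ssh_without_password() {
  ssh -o BatchMode=yes -o ConnectTimeout=5 "$1" true >/dev/null 2>&1
}

# Guarded so nothing runs on machines without an ssh client.
if command -v ssh >/dev/null 2>&1; then
  if can_ssh_without_password localhost; then
    echo "passwordless ssh to localhost works"
  else
    echo "ssh to localhost still needs a password (or sshd is not reachable)"
  fi
fi
```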

Format the filesystem:

$ bin/hdfs namenode -format

Start the NameNode and DataNode daemons:

If no errors are reported, the configuration is working.

The Hadoop daemon log output is written to the `$HADOOP_LOG_DIR` directory (defaults to `$HADOOP_HOME/logs`).
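The start command itself did not survive in these notes. The official single-node setup guide starts HDFS with `sbin/start-dfs.sh`, so the sketch below assumes that script and the install path /usr/local/hadoop used here. It then uses `jps` (shipped with the JDK) to list running JVMs and prints any expected daemon that is absent; `missing_daemons` is a made-up helper name.

```shell
# Read `jps` output on stdin and print each expected daemon that is absent.
missing_daemons() {
  running="$(cat)"
  for daemon in "$@"; do
    # -w matches whole words, so NameNode does not match SecondaryNameNode.
    printf '%s\n' "$running" | grep -qw "$daemon" || printf '%s\n' "$daemon"
  done
}

# HADOOP_HOME defaults to the install path used in these notes.
HADOOP_HOME="${HADOOP_HOME:-/usr/local/hadoop}"
if [ -x "$HADOOP_HOME/sbin/start-dfs.sh" ] && command -v jps >/dev/null 2>&1; then
  "$HADOOP_HOME/sbin/start-dfs.sh"
  jps | missing_daemons NameNode DataNode SecondaryNameNode
fi
```

No output from the last line means all three daemons are running; otherwise the logs directory mentioned above is the place to look.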

Browse the NameNode web interface; by default it is available at http://ip:9870/

HDFS: browsing, uploading, and deleting files

Via the web interface

Via the command line

hdfs dfs -mkdir xxx

hdfs dfs -put xxx xxx

hdfs dfs -ls xx
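The placeholders above can be filled in as a full round trip, including the delete step that the heading mentions. This is only a sketch: the paths /tmp/hello.txt and /user/hadoop/demo are hypothetical, and `hdfs_roundtrip` is a helper invented for this note. The subcommands `-mkdir -p`, `-put`, `-ls`, and `-rm` are standard `hdfs dfs` operations.

```shell
# Upload one local file into an HDFS directory, list it, then delete it again.
hdfs_roundtrip() {
  local_file="$1"; target_dir="$2"
  hdfs dfs -mkdir -p "$target_dir" &&
  hdfs dfs -put "$local_file" "$target_dir/" &&
  hdfs dfs -ls "$target_dir" &&
  hdfs dfs -rm "$target_dir/$(basename "$local_file")"
}

# Example call, guarded so it only runs where the hdfs command exists.
# Both paths are hypothetical.
if command -v hdfs >/dev/null 2>&1; then
  echo "hello hdfs" > /tmp/hello.txt
  hdfs_roundtrip /tmp/hello.txt /user/hadoop/demo || echo "round trip failed"
fi
```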

YARN job configuration

etc/hadoop/mapred-site.xml:

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

<property>

<name>mapreduce.application.classpath</name>

<value>$HADOOP_MAPRED_HOME/share/hadoop/mapreduce/*:$HADOOP_MAPRED_HOME/share/hadoop/mapreduce/lib/*</value>

</property>

</configuration>


etc/hadoop/yarn-site.xml:


<configuration>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.nodemanager.env-whitelist</name>

<value>JAVA_HOME,HADOOP_COMMON_HOME,HADOOP_HDFS_HOME,HADOOP_CONF_DIR,CLASSPATH_PREPEND_DISTCACHE,HADOOP_YARN_HOME,HADOOP_HOME,PATH,LANG,TZ,HADOOP_MAPRED_HOME</value>

</property>

</configuration>


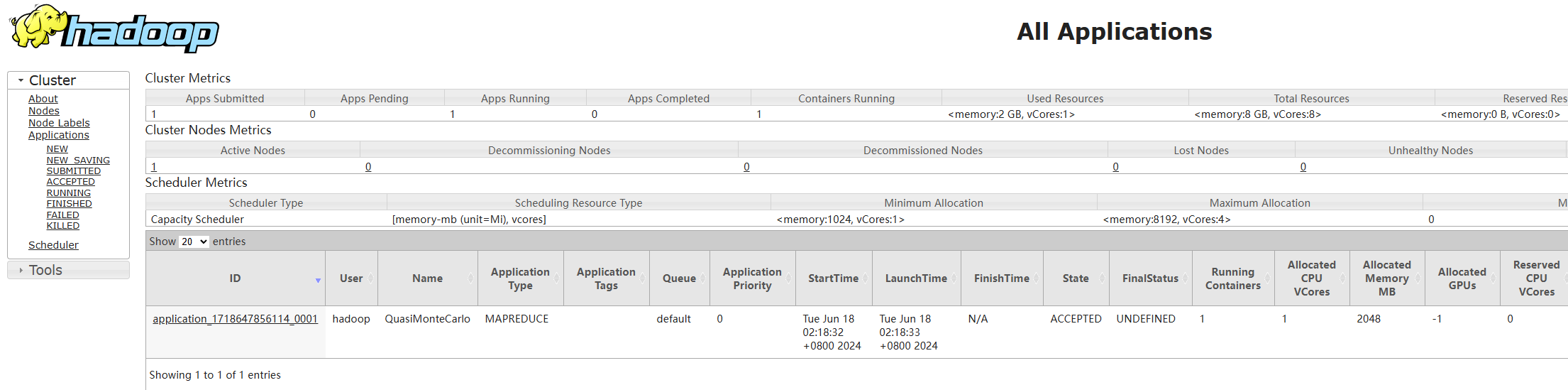

Start the ResourceManager and NodeManager daemons:

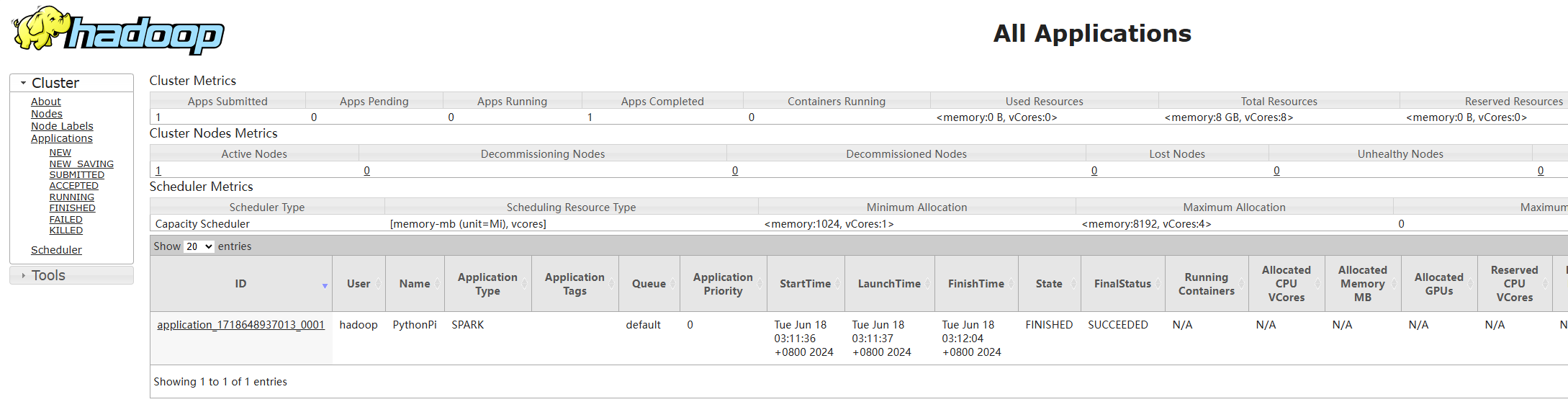

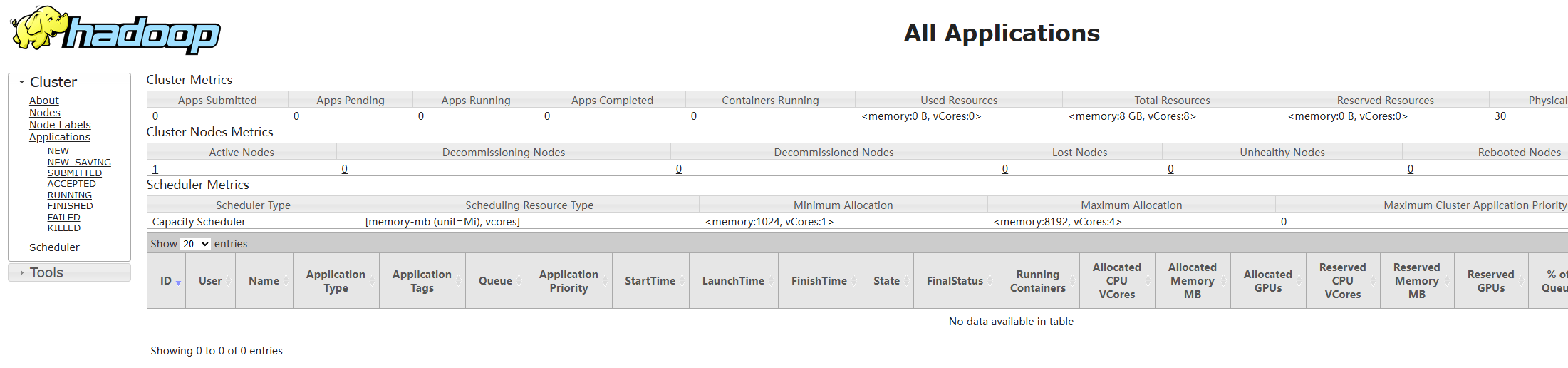

Browse the ResourceManager web interface; by default it is available at http://ip:8088/
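The YARN start command is only visible in the screenshots. The official single-node guide uses `sbin/start-yarn.sh`, which the sketch below assumes, together with the install path /usr/local/hadoop. It then probes both web interfaces with curl; any HTTP response (200, or a redirect such as 302) suggests the daemon is listening, while 000 means the connection failed. The 10-second pause and the helper name `http_status` are arbitrary choices for this example.

```shell
# Print the HTTP status code for a URL (000 when the connection fails).
http_status() {
  curl -s -o /dev/null -w '%{http_code}' --max-time 5 "$1"
}

# HADOOP_HOME defaults to the install path used in these notes.
HADOOP_HOME="${HADOOP_HOME:-/usr/local/hadoop}"
if [ -x "$HADOOP_HOME/sbin/start-yarn.sh" ]; then
  "$HADOOP_HOME/sbin/start-yarn.sh"
  sleep 10   # arbitrary pause so the daemons can open their ports
fi

# Ports from the notes: 9870 for the NameNode, 8088 for the ResourceManager.
if command -v curl >/dev/null 2>&1; then
  for url in http://localhost:9870/ http://localhost:8088/; do
    echo "$url -> $(http_status "$url" || true)"
  done
fi
```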

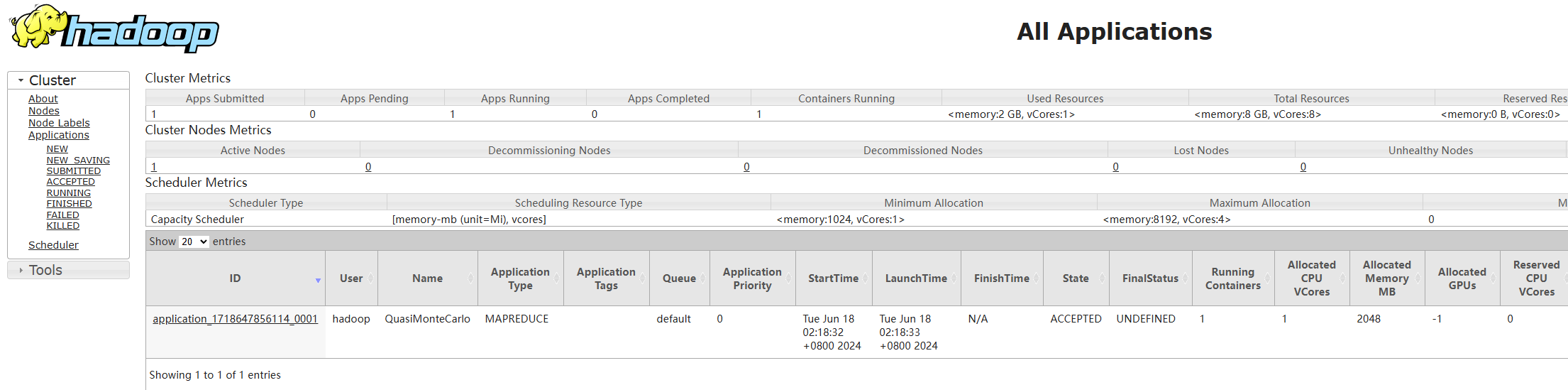

Test job

hadoop jar hadoop-mapreduce-examples-3.3.6.jar pi 10 50

The job succeeded if the output contains: Estimated value of Pi is 3.16000000000000000000

A record of the job also appears in the web interface.
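The command above expects the examples jar in the current directory. In the binary distribution it sits under share/hadoop/mapreduce, so the sketch below spells out the full path (assuming the /usr/local/hadoop install from these notes) and filters the job output down to the estimate. The two arguments to `pi` are the number of map tasks and the samples per map, so `10 50` uses only 500 sample points, which explains the coarse 3.16. `extract_pi` is a hypothetical helper.

```shell
# Pull the numeric estimate out of the job output read from stdin.
extract_pi() {
  sed -n 's/^Estimated value of Pi is \([0-9.]*\).*$/\1/p'
}

# HADOOP_HOME defaults to the install path used in these notes.
HADOOP_HOME="${HADOOP_HOME:-/usr/local/hadoop}"
examples_jar="$HADOOP_HOME/share/hadoop/mapreduce/hadoop-mapreduce-examples-3.3.6.jar"

# pi <maps> <samples per map>: 10 maps x 50 samples = 500 sample points in total.
if command -v hadoop >/dev/null 2>&1 && [ -f "$examples_jar" ]; then
  hadoop jar "$examples_jar" pi 10 50 2>/dev/null | extract_pi
fi
```

Raising either argument gives a closer estimate at the cost of a longer run.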

Install Spark

sudo mkdir /usr/local/spark

sudo mv spark-3.5.1-bin-hadoop3/* /usr/local/spark

sudo chown -R hadoop:hadoop /usr/local/spark

Edit the configuration

spark-env.sh:

cp /usr/local/spark/conf/spark-env.sh.template /usr/local/spark/conf/spark-env.sh

vim /usr/local/spark/conf/spark-env.sh

export SPARK_DIST_CLASSPATH=$(/usr/local/hadoop/bin/hadoop classpath)

HADOOP_CONF_DIR=/usr/local/hadoop/etc/hadoop/

YARN_CONF_DIR=/usr/local/hadoop/etc/hadoop/

Verify

cd /usr/local/spark

bin/run-example SparkPi

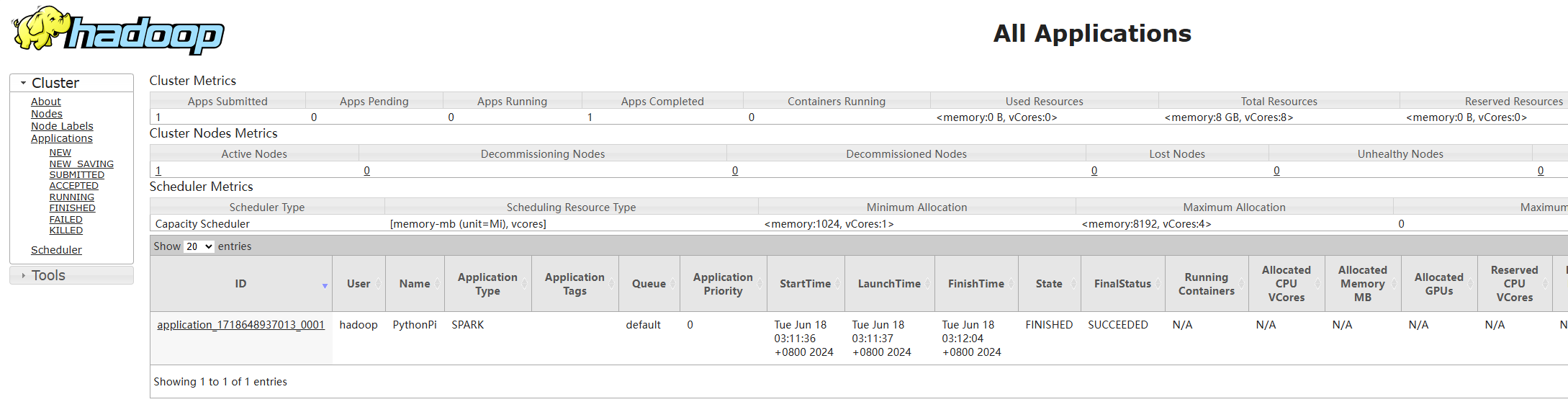

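Verify Spark on YARN

bin/spark-submit --master yarn --deploy-mode client --driver-memory 512m --executor-memory 512m --num-executors 2 --total-executor-cores 2 /usr/local/spark/examples/src/main/python/pi.py 10

Note that spark-submit does not list --total-executor-cores among its YARN options; on YARN the executor count is set by --num-executors.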
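To see the result without scrolling through the logs, the driver output can be filtered for the line that pi.py prints ("Pi is roughly ..."). The sketch below assumes the /usr/local/spark install from these notes, leaves out `--total-executor-cores`, and then asks YARN for finished applications with `yarn application -list -appStates FINISHED` to confirm the job really went through YARN. `spark_pi_line` is a made-up helper name.

```shell
# pi.py prints a line of the form "Pi is roughly 3.14..."; keep only that line.
spark_pi_line() {
  grep 'Pi is roughly'
}

# SPARK_HOME defaults to the install path used in these notes.
SPARK_HOME="${SPARK_HOME:-/usr/local/spark}"
if [ -x "$SPARK_HOME/bin/spark-submit" ]; then
  "$SPARK_HOME/bin/spark-submit" --master yarn --deploy-mode client \
    --driver-memory 512m --executor-memory 512m --num-executors 2 \
    "$SPARK_HOME/examples/src/main/python/pi.py" 10 2>/dev/null \
    | spark_pi_line || echo "no result line found"
  # List finished YARN applications to confirm the job ran on YARN.
  yarn application -list -appStates FINISHED || true
fi
```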